Russell published an interesting post about his first experience with Firebuild accelerating refpolicy’s and the Linux kernel’s build. It turned out that a few small tweaks could accelerate the builds even more, crossing the 10-second barrier with Linux’s build.

Build performance with 18 cores

The Linux kernel’s build time is a widely used benchmark for compilers, making it a prime candidate for testing a build accelerator as well. In the first run on Russell’s 18-core test system, the observed user+sys CPU time was cut by 44%, but the wall clock time actually increased, which was quite unusual: Firebuild performed much better than that in prior tests. To replicate the results, I set up a clean Debian Bookworm VM on my machine:

    lxc launch images:debian/bookworm --vm -c limits.cpu=18 -c limits.memory=16GB bookworm-vm

Compiling Linux 6.1.10 in this clean Debian VM showed build times closer to what I expected to see, ~72% less wall clock time and ~94% less user+sys CPU time:

    $ make defconfig && time make bzImage -j18
    real    1m31.157s
    user    20m54.256s
    sys     2m25.986s
    $ make defconfig && time firebuild make bzImage -j18
    # first run:
    real    2m3.948s
    user    21m28.845s
    sys     4m16.526s
    # second run
    real    0m25.783s
    user    0m56.618s
    sys     0m21.622s
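The wall clock saving can be recomputed from the `time` output with a little arithmetic. A minimal sketch, assuming `awk` is available; the helper names `to_seconds` and `pct_less` are made up for this illustration and are not part of Firebuild:

```shell
# Hypothetical helpers for illustration only.

# Convert a `time`-style value such as 1m31.157s to seconds.
to_seconds() {
    echo "$1" | awk -F'[ms]' '{ printf "%.3f\n", $1 * 60 + $2 }'
}

# Print by how many percent "after" is smaller than "before" (both in seconds).
pct_less() {
    awk -v before="$1" -v after="$2" \
        'BEGIN { printf "%.1f\n", (before - after) / before * 100 }'
}

# Wall clock time of plain make vs. the second firebuild run in the Debian VM.
pct_less "$(to_seconds 1m31.157s)" "$(to_seconds 0m25.783s)"   # prints 71.7
```

The same two helpers work for the CPU figures once the user and sys values are added up.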

There are multiple differences between Russell’s and my test system including having different CPUs (E5-2696v3 vs. virtualized Ryzen 5900X) and different file systems (BTRFS RAID-1 vs ext4), but I don’t think those could explain the observed mismatch in performance. The difference may be worth further analysis, but let’s get back to squeezing out more performance from Firebuild.

Firebuild was developed on Ubuntu. I was wondering if Firebuild was faster there, but I got only slightly better build times in an identical VM running Ubuntu 22.10 (Kinetic Kudu):

    $ make defconfig && time make bzImage -j18
    real    1m31.130s
    user    20m52.930s
    sys     2m12.294s
    $ make defconfig && time firebuild make bzImage -j18
    # first run:
    real    2m3.274s
    user    21m18.810s
    sys     3m45.351s
    # second run
    real    0m25.532s
    user    0m53.087s
    sys     0m18.578s

KVM virtualization certainly introduces some overhead, so builds should be faster in LXC containers. Indeed, all builds are faster by a few percent:

    $ lxc launch ubuntu:kinetic kinetic-container
    ...
    $ make defconfig && time make bzImage -j18
    real    1m27.462s
    user    20m25.190s
    sys     2m13.014s
    $ make defconfig && time firebuild make bzImage -j18
    # first run:
    real    1m53.253s
    user    21m42.730s
    sys     3m41.067s
    # second run
    real    0m24.702s
    user    0m49.120s
    sys     0m16.840s
    # Cache size: 1.85 GB

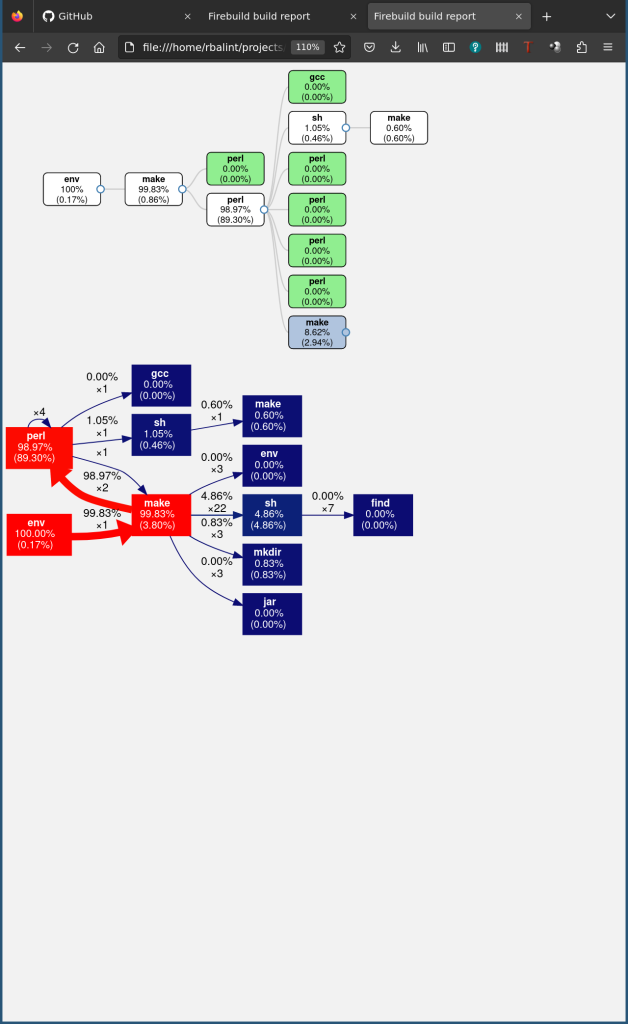

Apparently this ~72% reduction in wall clock time is what one should expect by simply prefixing the build command with firebuild on a similar configuration, but we should not stop here. To save cache space, Firebuild does not accelerate quicker commands by default. This howto suggests letting firebuild accelerate all commands, including even "sh", by passing "-o 'processes.skip_cache = []'" to firebuild.

Accelerating all commands in this build’s case increases the cache size by only 9% and raises the wall clock time saving to 91%, not only making the build more than 10X faster, but finishing it in less than 8 seconds, which may be a new world record:

    $ make defconfig && time firebuild -o 'processes.skip_cache = []' make bzImage -j18
    # first run:
    real    1m54.937s
    user    21m35.988s
    sys     3m42.335s
    # second run
    real    0m7.861s
    user    0m15.332s
    sys     0m7.707s
    # Cache size: 2.02 GB

There are even faster CPUs on the market than this 5900X. If you have access to one, please leave a comment if you could go below 5 seconds!

Scaling to higher core counts and comparison with ccache

Russell raised the very valid point that Firebuild’s single-threaded supervisor is a bottleneck on systems with many cores, and a comparison to ccache also came up in the comments. Since ccache does not have a central supervisor, it could scale better with more cores, but let’s see if ccache could go below 10 seconds with the build times…

Well, no. The best time for ccache is 18.81s, with -j24. Both firebuild and ccache keep gaining from extra cores up to 8 cores, but beyond that the wall clock time improvements diminish. The more interesting difference is that firebuild’s user and sys time is basically constant from -j1 to -j24, similarly to ccache’s user time, but ccache’s sys time increases linearly or even exponentially with the number of used cores. I suspect this is due to the many parallel ccache processes performing file operations to check if cache entries could be reused, while in firebuild’s case the supervisor performs most of that work, which does not require in-kernel synchronization across multiple cores.
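A scaling comparison like this can be scripted by repeating the same build with different job counts. The sketch below is only one way to do it and assumes bash (it relies on the `time` keyword and `TIMEFORMAT`); the helper name `bench_jobs` and the job counts in the example are made up for illustration:

```shell
# Sketch only, requires bash. bench_jobs is a hypothetical helper.

# bench_jobs J1 J2 ... -- CMD [ARGS...]
# Runs "CMD ARGS -jN" once per job count and prints "N real user sys" in seconds.
bench_jobs() {
    local jobs=()
    while [ "$#" -gt 0 ] && [ "$1" != "--" ]; do
        jobs+=("$1")
        shift
    done
    shift                              # drop the "--" separator
    local TIMEFORMAT='%R %U %S'        # make bash's `time` print real, user, sys
    local j t
    for j in "${jobs[@]}"; do
        # `time` reports on the group's stderr; the command's own output is dropped.
        t=$( { time "$@" -j"$j" >/dev/null 2>&1; } 2>&1 )
        echo "$j $t"
    done
}

# Example (not run here):
#   bench_jobs 1 2 4 8 16 24 -- firebuild make bzImage
```

For the numbers to be comparable, every measurement has to start from the same state, for example a freshly cleaned source tree and an already populated cache.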

It is true that the single-threaded firebuild supervisor is a bottleneck, but the supervisor also implements a central filesystem cache. Checking whether a command’s cache entry can be reused therefore takes far fewer system calls and much less user space hashing, making the architecture more efficient overall than ccache’s.

The beauty of Firebuild is not being faster than ccache, but being faster than ccache with basically no hard-coded information about how C compilers work. It can accelerate any other compiler or program that generates deterministic output from its input, just by observing what they did in their prior runs. It is like having ccache for every compiler including in-house developed ones, and also for random slow scripts.
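The idea of reusing the output of any deterministic command can be illustrated with a toy cache. This is not how Firebuild is implemented: Firebuild finds out what a process read and wrote by observing it, while in this sketch the caller has to name the single input and output file. The names `memo` and `CACHE_DIR` are hypothetical, and `sha256sum` from GNU coreutils is assumed to be installed:

```shell
# Toy illustration only, not Firebuild's mechanism.
CACHE_DIR="${CACHE_DIR:-$(mktemp -d)}"

# memo INPUT OUTPUT CMD [ARGS...]
# Runs CMD only if there is no stored OUTPUT for this command line and INPUT content.
memo() {
    local input="$1" output="$2"
    shift 2
    local key
    # The cache key covers the exact command line and the input file's content.
    key=$( { printf '%s\0' "$@"; cat "$input"; } | sha256sum | cut -d' ' -f1 )
    if [ -f "$CACHE_DIR/$key" ]; then
        cp "$CACHE_DIR/$key" "$output"     # hit: replay the stored output
        echo "hit"
    else
        "$@" || return                     # miss: run the command, stop on failure
        cp "$output" "$CACHE_DIR/$key"     # remember the output for the next run
        echo "miss"
    fi
}

# Example (not run here):
#   memo in.txt out.txt sort -o out.txt in.txt   # prints "miss" the first time
#   memo in.txt out.txt sort -o out.txt in.txt   # prints "hit" while in.txt is unchanged
```

Nothing in `memo` knows what `sort` does; it only relies on the command producing the same output for the same input, which is the property the paragraph above describes.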